Modern startup teams love to talk about AI-powered workflows. If you're in a 5–20-person company trying to ship faster, you've probably added automation or a "smart" tool to relieve the pressure. On paper, it makes sense: let AI handle the busywork so a small team can do more without hiring.

In practice, things get messier.

The initial productivity bump rarely lasts. Beneath the demos and early wins, hidden costs start to pile up. Work feels more fragmented, coordination gets harder, and progress stalls in ways no dashboard tracks. AI often ends up accelerating a broken system instead of fixing it.

The uncomfortable truth is that AI doesn't repair broken workflows; it exposes them. And once teams have invested time, effort, and belief in a setup, they tend to double down rather than step back. That's how sunk costs take hold, rebuild loops begin, and workflow debt quietly accumulates. This article looks at why that keeps happening, and what it actually takes to break the cycle.

Key Takeaways

- Most teams don't walk away from broken AI setups. They double down, because too much time and effort has already been sunk.

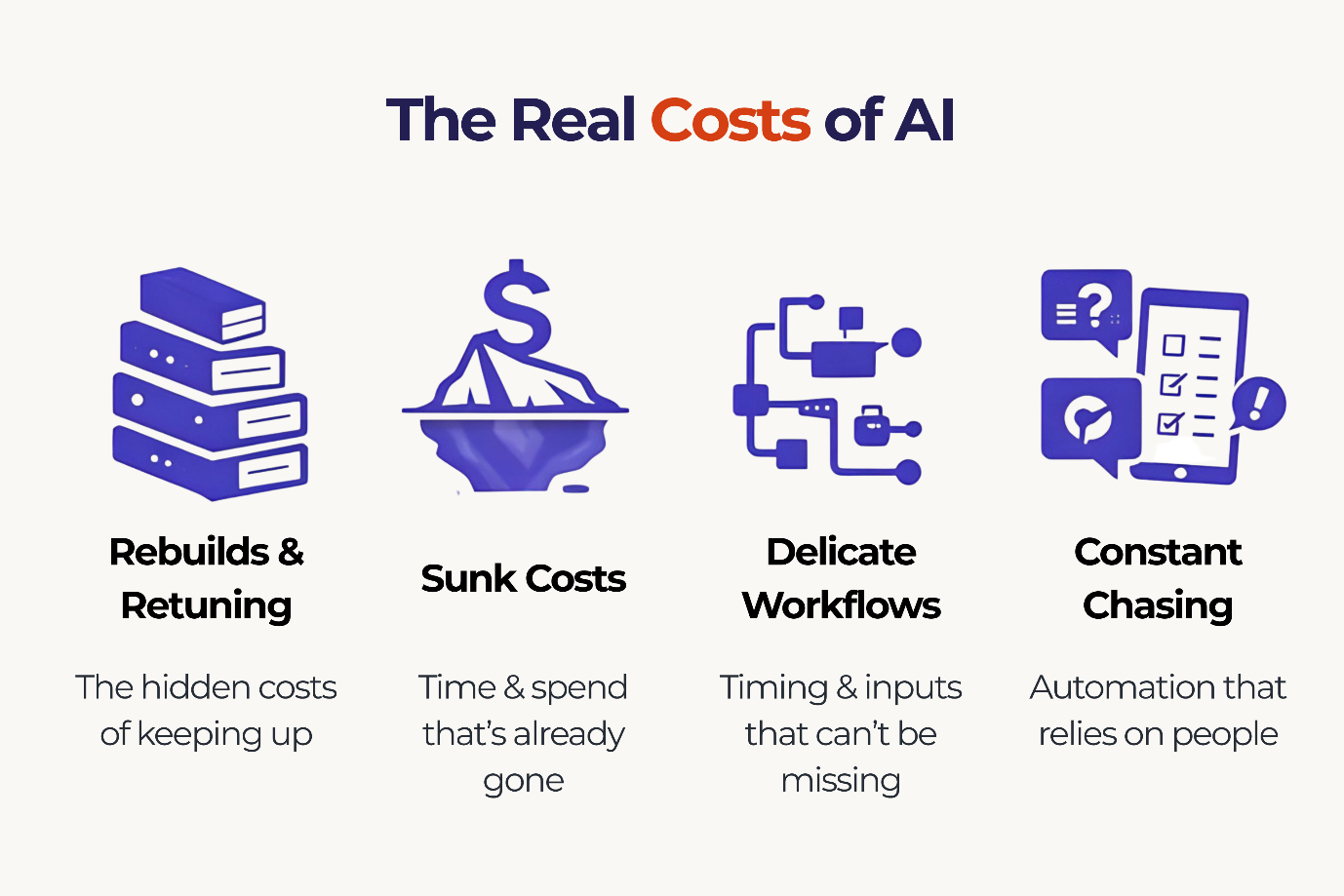

- The real cost of AI isn't the licence. It's the rebuilds, retraining, and coordination work no one ever counts.

- Sunk cost quietly drives AI decisions long after it's obvious the workflow isn't holding up.

- Workflow debt builds when automation depends on perfect timing, constant availability, and people behaving predictably.

- AI only creates durable value when workflows are built to adapt as teams, context, and conditions change.

Why teams keep investing in broken AI

AI is everywhere. Real results… much less so. Most companies are experimenting with AI in some form, but many leaders still can't point to clear revenue gains or cost savings. And yet teams keep piling on more tools, more automations, more experiments.

Why? Hype, mostly. And fear.

We've all seen the promises: 30%, 40%, even bigger productivity gains. Some teams do see an initial spike in output when AI rolls out. That's enough to ensure no one wants to be the team that "missed the wave."

But those early wins don't always hold. Upwork's research shows that many employees who report productivity gains from AI also report higher levels of burnout. Work speeds up, expectations rise, and suddenly the team is running hotter just to keep up.

Still, very few teams stop. By that point, they've paid for licences, spent weeks integrating tools, trained models, built prompts, and rewired workflows. Walking away feels like throwing that progress out, even when the progress itself is questionable.

I've spoken to founders who know their AI setup is fragile. Data pipelines break. Outputs need constant cleanup. Workflows stall the moment someone logs off. But they keep pushing, hoping the next version will fix it.

That's not optimism. It's sunk cost. And it's one of the quiet reasons AI adoption keeps growing while real productivity gains lag behind.

The sunk cost fallacy trap

The sunk cost problem isn't complicated. It's the quiet thought that says: "We've already put too much into this to stop now."

In AI, that thought is everywhere. Teams spend months onboarding a tool. Training it. Tweaking prompts. Bending workflows around its limitations. Eventually it does something, maybe 10–15% of the job. Not great, but not useless either.

And that's where things get dangerous. Because once a system is "good enough," walking away feels worse than sticking with it. Even when a newer option could do far more with far less effort, it's hard to admit that the last six months didn't really move the needle.

I've seen this play out repeatedly. Founders know their automations break the moment reality shifts, yet they keep going. Not because the setup is working, but because stopping would mean admitting it never really did.

So teams tolerate clunky workflows. Manual handoffs. Half-automated processes that only work on a good day. Licences renew. Engineers keep patching. Complexity piles up. What looks like momentum is often just inertia.

The Sunk Cost Fallacy: Workflow debt builds when automation can't adapt to missing context, delays, and real team behaviour.

And the irony is brutal: by clinging to "okay" AI, teams burn more time and money than if they'd cut their losses early. The system never quite delivers, but it's expensive enough, in effort and ego, that no one wants to pull the plug.

The simplest question most teams avoid is also the most useful one: If we weren't already invested in this setup, would we choose it today?

If the answer is no, the problem isn't the model. It's the cost of pretending progress is happening.

Invisible rebuild loops and workflow debt

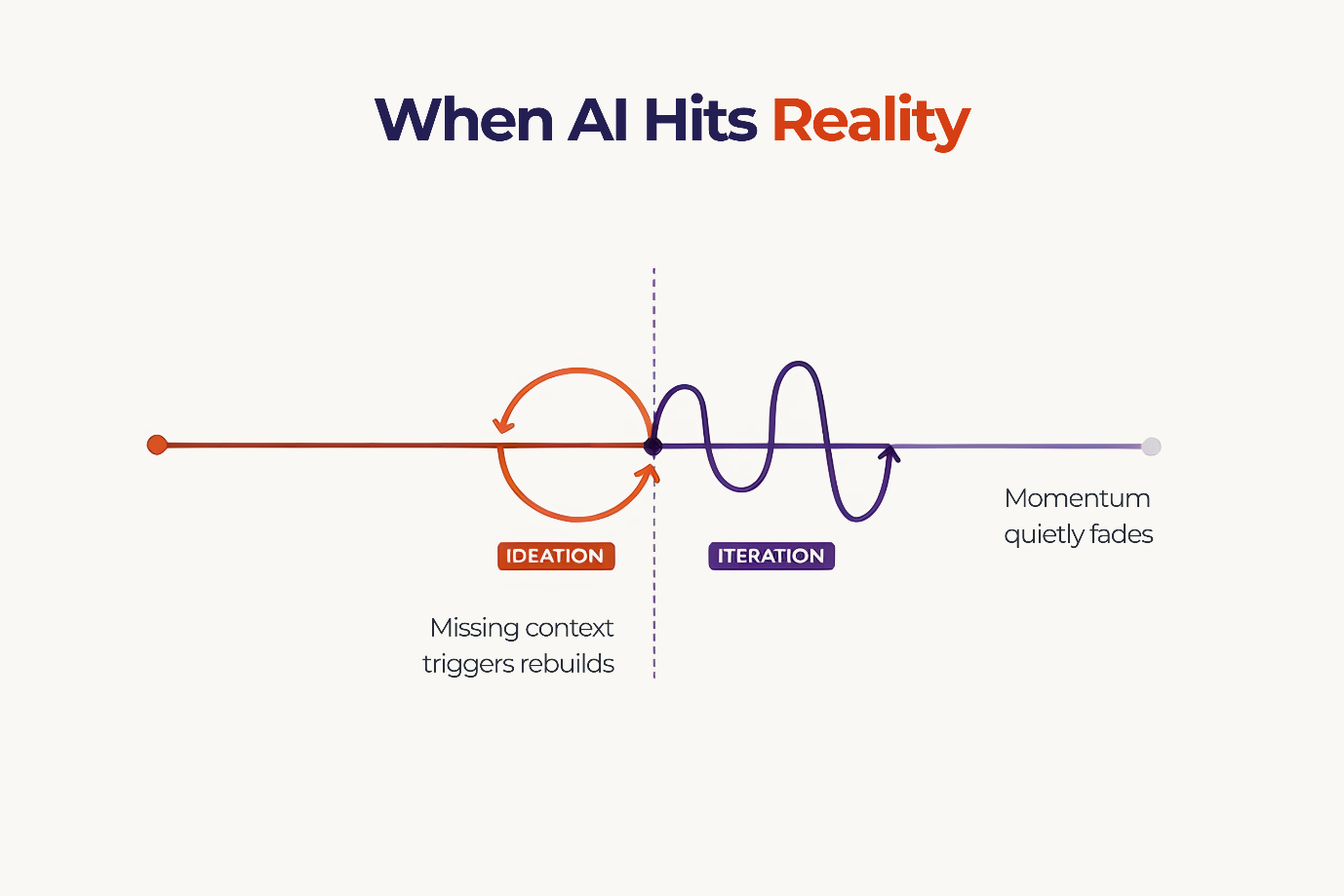

Even when teams don't fully abandon a tool, they usually fall into a quieter trap: rebuild loops. The workflow isn't fundamentally sound, so people keep patching it: manual checks, edge cases, "quick fixes" that somehow become permanent. The automation still exists on paper, but it needs constant supervision. That invisible effort compounds. This is workflow debt: operational drag that builds interest over time, much like technical debt.

The pattern is familiar. A workflow looks clean in a diagram, until it depends on a person. Let's call him Bob. Bob holds a key piece of context. He's in another time zone. Or in meetings all day. Or just not available yet. The AI workflow still tries to move forward, but the information isn't there. So it stalls. Or worse, it ships with gaps. Later, someone has to step in, rerun the workflow, fix the output, and explain what changed. The work gets done twice. No one planned for it. Everyone feels it.

This isn't an edge case; it's daily reality. McKinsey reports that employees spend 1.8 hours every day, roughly nine hours a week, just searching for information. Put differently, companies hire five people, but only four are doing productive work; the fifth is off hunting for answers that should already exist.

Over time, these small rebuilds pile up. Teams don't notice because it looks like normal work: extra checks, late fixes, rework squeezed into the margins of the day. But zoom out and the cost becomes obvious: slower delivery, repeated effort, and growing fatigue. Gartner predicts that over 40% of agentic AI projects will be cancelled by the end of 2027, citing escalating costs, unclear business value, and inadequate risk controls. Those are rarely model failures; they're signs that the workflows and operating assumptions behind the projects don't survive real-world change.

That's the real cost of AI most teams never budget for. Not the licence. The debt. The rebuilds. The quiet erosion of momentum when systems can't adapt to how work actually happens.

Adaptive workflows: Reducing drag and fatigue

If there's one lesson teams keep relearning, it's this: you can't bolt AI onto a broken workflow and expect productivity to magically appear.

Real gains don't come from smarter models. They come from workflows that can bend when reality changes. People go offline. Information arrives late. Decisions pause. Adaptive systems expect this. Rigid automation doesn't, and it breaks the moment conditions aren't perfect.

The research backs this up. McKinsey's State of AI research found that only 21% of organisations using generative AI have fundamentally redesigned even some of their workflows, and workflow redesign was the factor most strongly linked to bottom-line impact. Everyone else is stuck running pilots, rebuilding workflows, and slowly losing trust, burning credits and credibility in the process.

The difference isn't ambition. It's design.

Adaptive workflows treat missing information as normal, not as failure. If Bob hasn't replied yet, the system doesn't stall or guess; it waits, reroutes, or flags the gap. No silent failures. No double work later. We learned this the hard way and started building explicit "waiting" states into our own workflows. Progress didn't speed up overnight, but it stopped collapsing.
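To make the idea of explicit "waiting" states concrete, here is a minimal Python sketch of how a single workflow step might decide what to do when context is missing. It is not Ayven's implementation: `StepState`, `Step`, `advance`, the 24-hour wait window, and the backup-owner rule are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional


class StepState(Enum):
    READY = "ready"        # all required context is present; safe to run
    WAITING = "waiting"    # context missing, still inside the wait window
    REROUTED = "rerouted"  # wait window expired; handed to a backup owner
    FLAGGED = "flagged"    # no one else available; surfaced to a human, never guessed


@dataclass
class Step:
    name: str
    required: set[str]                  # context keys the step cannot run without
    owner: str
    backup_owner: Optional[str] = None  # hypothetical fallback person
    max_wait: timedelta = timedelta(hours=24)  # assumed wait window
    context: dict[str, str] = field(default_factory=dict)


def advance(step: Step, started_at: datetime, now: datetime) -> StepState:
    """Decide the step's next state instead of stalling silently or guessing."""
    missing = step.required - set(step.context)
    if not missing:
        return StepState.READY
    if now - started_at < step.max_wait:
        return StepState.WAITING  # missing context is normal: keep waiting
    if step.backup_owner is not None:
        step.owner = step.backup_owner  # reroute by reassigning the step
        return StepState.REROUTED
    return StepState.FLAGGED  # nobody to reroute to: make the gap visible
```

The design choice that matters is that missing context never produces output. Every gap resolves to a named state someone can see, so nothing ships with holes and nothing has to be quietly redone later.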

Good workflows also surface context where the work happens. Most rework comes from hunting for the "latest" doc, the right thread, the missing decision. When knowledge is attached to the workflow instead of scattered across tools, execution gets lighter. That's how you pay down workflow debt instead of compounding it.

And here's the part most AI demos ignore: humans aren't infinitely elastic. If your system assumes people will sprint faster just because AI can, you're designing for burnout. Adaptive workflows respect timing. If something finishes at 10pm, it can wait until morning. That's not softness; it's sustainable performance.
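The "it can wait until morning" rule is simple enough to sketch. The following minimal Python example assumes a fixed 09:00 to 18:00, Monday to Friday schedule in the recipient's local time; the constants and the `next_delivery_time` function are hypothetical, and a real system would need per-person calendars and time zones.

```python
from datetime import datetime, time, timedelta

# Assumed working hours in the recipient's local time (hypothetical values).
WORKDAY_START = time(9, 0)
WORKDAY_END = time(18, 0)


def next_delivery_time(finished_at: datetime) -> datetime:
    """Return when a finished result should reach a person.

    Work finished during working hours is delivered immediately; anything
    finished early, late, or at the weekend is held until the next workday
    morning instead of pinging someone off-hours.
    """
    day = finished_at.date()
    t = finished_at.time()
    is_workday = day.weekday() < 5  # Monday=0 ... Friday=4

    if is_workday and WORKDAY_START <= t < WORKDAY_END:
        return finished_at  # inside working hours: deliver now
    if is_workday and t < WORKDAY_START:
        return datetime.combine(day, WORKDAY_START)  # early morning: hold until 09:00

    # After hours or at the weekend: roll forward to the next workday morning.
    day += timedelta(days=1)
    while day.weekday() >= 5:  # skip Saturday (5) and Sunday (6)
        day += timedelta(days=1)
    return datetime.combine(day, WORKDAY_START)
```

With these assumptions, a result finished at 22:00 on Tuesday 2 January 2024 is delivered at 09:00 on Wednesday 3 January, and one finished late on a Friday waits until Monday morning. The work itself still completes when it completes; only the handoff to a person is timed.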

This thinking is what led us to build Ayven. Not another tool. Not another dashboard. An agent designed to work the way teams actually do: pausing when context is missing, picking back up when it's available, and keeping work moving without constant babysitting.

The hidden cost of AI isn't the licence. It's the rebuilds. The rework. The quiet exhaustion of fixing "automated" systems that never quite close the loop. Those costs don't have to stay hidden. If AI feels like it's slowing your team down, don't reach for a better model. Rethink the workflow. That's where the real leverage is.

The real takeaway

AI doesn't create workflow debt. Reality does.

Distributed teams, async work, shifting priorities, and human unpredictability aren't edge cases to be engineered away. They're the default conditions most teams operate in. The problem is that many AI systems are built as if those conditions don't exist, assuming perfect information, stable ownership, and uninterrupted execution.

That's where the hidden costs pile up.

Sunk costs form when workflows break and teams feel compelled to rebuild instead of rethink. Rebuild loops emerge when automation depends on brittle assumptions. Over time, that drag compounds into workflow debt — work that looks automated on paper but quietly consumes more time to maintain than it ever saved.

The future of productive AI isn't about generating more output, running faster models, or layering tools on top of broken systems. It's about designing AI that understands collaboration, respects timing, and adapts when work inevitably changes. That's when rebuilds slow down. That's when sunk costs stop accumulating. And that's when AI finally starts doing what it promised: helping teams move work forward, instead of weighing them down.

What sustainable work actually looks like

If you work in a distributed, online-first team, this is worth pausing on.

Does your async setup actually work when people are offline, busy, or in different time zones? Or does progress quietly depend on everyone being available at the same time?

The next generation of high-performing teams won't be defined by longer hours or faster output. They'll be defined by systems that keep work moving without constant chasing, rework, or context loss.

That's the problem we're focused on at Alknoma — building automation that adapts to real team dynamics, instead of forcing teams to adapt to brittle workflows.